BC

What Is A Computer

July 29th 2024

Computers are magical things at the center of how we think and interact with the world. But what are they?

In this post I (attempt to) make three cases:

- That computers are descendants of very basic technologies from the 1800s and before.

- That making sense of them is easier than you may think.

- That the change in computers since their inception can be attributed to changes in speed, not changes in fundamentals.

This is not an article about what computers have brought to society, or about what they may or may not bring in the future. It is an article about what they are and how they function.

You're (probably not) An Idiot

I believe the only idiot(s) in the room (so sad when there is more than one :) are the people who underestimate their audience and assume it cannot grasp the conceptual nature of computing (and those of you who would rather hide).

Consider how incredibly insane it is that so many books in the world are created from a small set of 26 letters from the Latin alphabet, some spacing, and about 8 punctuation symbols. If you're having a hard time wrapping your mind around why computers can do so many different things, think of it the same way. I'm not sure I fully grasp how so many books can be written in so many different ways, but I'd never say I didn't understand how a book was written. Being amazed and being confused are very separate things.

I will share that, in all I've done with computers, this concept of a huge variety of composition from a small set of tokens is the same for computing as it is for any other written format, such as the English language or mathematics. While it is never easy to understand the majesty of it, the mechanics are relatively easy to understand when you break them down.

From BC to 1800AD to Now

Here are some ideas of what the term Computer has meant over time.

BC

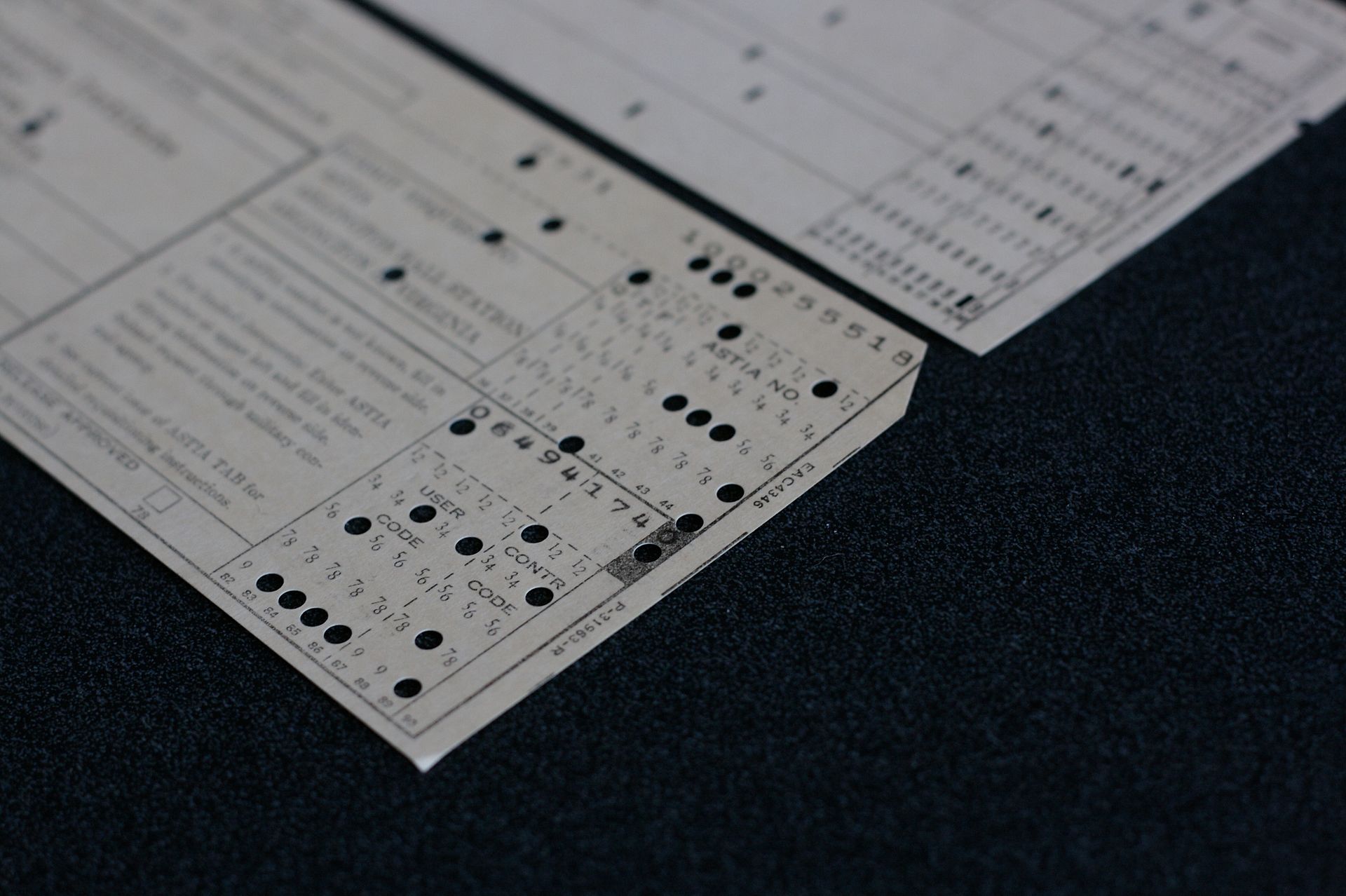

1800s

1970s

1980s

1990s

late 1990s

2000s

2029+

Believe it or not, everything I discuss in this post was reflected in the Jacquard loom, patented in 1804, and a number of the concepts were already present in abacus calculators from the late BC period.

And no... I don't think the next step is terminator... the hand maiden is way more likely.

The Distortion of Magnitude

Modern computers are unrecognizable when compared to their ancestors. Consider the Jacquard loom from the 1800s, or the punch card machines of the 70s and 80s, against a cell phone, a laptop, or the internet. Compare this with another invention that came of age in the early 1900s, the automobile. There is one reason why modern computers are seen as completely different from their ancestors, whereas cars are seen as still very similar to cars from the early 1900s: the sheer difference in speed as computers evolved makes the roots of computing unclear.

While the mechanisms of modern computers are virtually the same as those of their ancestors, the differences in speed and magnitude are vast. Many cell phones have eight processor cores, each running at about 2 GHz. That is roughly 2,000,000,000 basic steps (clock cycles) every second, per core. Compare that with the Ford Model T, which went 42 mph in 1915, and the Ford Mustang, which today only goes around 3 or 4 times that speed.
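To put the figures in the paragraph above side by side, here is a small back-of-the-envelope sketch. The numbers are the rough ones quoted in this post, the function names are made up for illustration, and a clock cycle is only an approximation of "one calculation".

```python
# Rough figures quoted in this post; illustrative, not measurements.
CORES = 8                           # processor cores in the phone
CYCLES_PER_SECOND = 2_000_000_000   # 2 GHz per core

MODEL_T_MPH = 42                    # Ford Model T, 1915
MUSTANG_MULTIPLIERS = (3, 4)        # "3 or 4 times that speed"


def total_cycles_per_second(cores, hz):
    """Upper bound on clock cycles per second across all cores."""
    return cores * hz


def speed_range(base_mph, multipliers):
    """Lowest and highest speed implied by the multipliers."""
    low, high = multipliers
    return (base_mph * low, base_mph * high)


phone_total = total_cycles_per_second(CORES, CYCLES_PER_SECOND)
mustang_mph = speed_range(MODEL_T_MPH, MUSTANG_MULTIPLIERS)

print(f"{phone_total:,} cycles per second")   # 16,000,000,000 cycles per second
print(mustang_mph)                            # (126, 168)
```

A century of cars bought a factor of 3 or 4. The phone's number has ten zeros in it.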

A Bunch of Switches

To start, here is a simple yet infuriating analogy: those light switches where you never know whether they are on or off. I'm talking about the ones on both sides of a room that both have to be up, or both down, to turn on the light. This, believe it or not, is the most basic function of a computer: to send electricity based on the on or off state of two other sources of electricity.

It's how computers answer the question If and also the question Or, which are fundamental conditions for most reasoning in the world. It's not only addition that makes a computer work. It's the combination of on and off states that gives computers their capability.
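The two-switch light can be written down as a tiny truth table. This is a minimal sketch, assuming the light is on exactly when both switches are in the same position; the function names (`hallway_light`, `both_on`, `either_on`) are mine, chosen for illustration.

```python
def hallway_light(switch_a, switch_b):
    """The light is on when both switches are up or both are down."""
    return switch_a == switch_b


def both_on(a, b):
    """On only if both inputs are on."""
    return a and b


def either_on(a, b):
    """On if at least one input is on (the question Or)."""
    return a or b


# Walk through every combination of two switches (True = up, False = down).
for a in (False, True):
    for b in (False, True):
        print(a, b, "->", hallway_light(a, b), both_on(a, b), either_on(a, b))
```

Two inputs, one output, and the output depends only on the combination of the inputs. Everything else in a computer is built by wiring lots of these together.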

Computers Have Four Basic Functions

- Calculate: compare, add/subtract, multiply, zero check, etc.

- Set/Copy: place a value held in one place into another place.

- Addressing: locate information by a numerical address.

- Jump/Return: do something new, while remembering where it came from.

This is true of all forms of computing in the last 100 years. And while combinations of these happening several billion times a second make for a lot of patterns and calculations, the mechanisms remain the same.
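To make the four functions concrete, here is a toy machine in Python. It is a sketch under my own assumptions, not how any real processor is built: the instruction names (`set`, `add`, `jump_if_zero`, `call`, and so on) and the memory layout are invented for illustration.

```python
def run(program, memory):
    """Run a list of instructions against a list of numbered memory cells."""
    pc = 0              # address of the next instruction
    return_stack = []   # remembers where each call came from
    while True:
        op, *args = program[pc]
        pc += 1
        if op == "set":                # Set/Copy: put a value at an address
            addr, value = args
            memory[addr] = value
        elif op == "add":              # Calculate: add two cells into a third
            a, b, dst = args
            memory[dst] = memory[a] + memory[b]
        elif op == "jump_if_zero":     # Calculate (zero check), then Jump
            addr, target = args
            if memory[addr] == 0:
                pc = target
        elif op == "jump":             # Jump: carry on from somewhere else
            (pc,) = args
        elif op == "call":             # Jump, remembering where we were
            (target,) = args
            return_stack.append(pc)
            pc = target
        elif op == "return":           # Return to where the call came from
            pc = return_stack.pop()
        elif op == "halt":
            return memory
        else:
            raise ValueError(f"unknown instruction: {op}")


# Multiply 4 by 3 using only addition, a zero check, and jumps.
# Memory cells: 0 = counter, 1 = total, 2 = step, 3 = minus one.
PROGRAM = [
    ("set", 0, 3),            # 0: counter = 3
    ("set", 1, 0),            # 1: total = 0
    ("set", 2, 4),            # 2: step = 4
    ("set", 3, -1),           # 3: minus one = -1
    ("jump_if_zero", 0, 8),   # 4: finished when the counter reaches zero
    ("call", 9),              # 5: go add the step, then come back here
    ("add", 0, 3, 0),         # 6: counter = counter + (-1)
    ("jump", 4),              # 7: go around again
    ("halt",),                # 8: stop
    ("add", 1, 2, 1),         # 9: total = total + step
    ("return",),              # 10: back to the instruction after the call
]

result = run(PROGRAM, [0, 0, 0, 0])
print(result[1])   # 12
```

Every cell is reached by its number (Addressing), values are placed into cells (Set/Copy), the only arithmetic is adding and checking for zero (Calculate), and the loop and the little subroutine are just jumps that remember where to come back to (Jump/Return).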

To extend our light switch example, imagine you were outside your house, leaving for the night, and realized you had left a light on. You are so far away that you can't tell which light it is, so you call a family member who is home and ask them to flip each light switch, one at a time, until you see the light go out.

In this case you are acting as the Zero Check. You don't know which switch to flip, but you know when there are zero lights on. As your family member flips the switches, they tell you which one it was and you write it down. Let's say you have a paper with 3 lines on it. On line 1 of your notes, you write down "Porch Light".

The next time you leave for the night you could say "Let's remember to turn off the porch light" or "Let's remember to turn off the light on line 1 of my notes". The latter is how a computer would do it. That's an example of Set/Copy, Addressing, and a little bit of the purpose of Jump/Return. After looking at line 1 of your notes, you know to return to the task of ensuring the lights are off. This is, at a high level, how computers manage to run programs.

The most important points are:

- You did not know which light it was until you saw zero lights.

- You wrote down which light to look up later.

- You knew where to look in your notes.

- After looking at your notes you returned to what you were doing.
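The same story can be written as a few lines of Python. This is a loose sketch of the analogy, not a real program design: the extra light names ("Kitchen", "Hallway") and the function names are invented, and the notes are a dictionary keyed by line number.

```python
def find_light_left_on(lights):
    """Flip one switch at a time until zero lights are on; return its name."""
    for name in lights:
        lights[name] = not lights[name]      # family member flips a switch
        if not any(lights.values()):         # zero check: no lights visible
            return name
        lights[name] = not lights[name]      # wrong one, flip it back
    raise ValueError("no single switch turns every light off")


def leave_for_the_night(lights, notes):
    """Look up line 1 of the notes, switch that light off, then carry on."""
    name = notes[1]                  # addressing: we know where to look
    lights[name] = False
    return not any(lights.values())  # back to the task: are the lights off?


house = {"Kitchen": False, "Porch Light": True, "Hallway": False}
notes = {1: None, 2: None, 3: None}        # a paper with 3 lines

notes[1] = find_light_left_on(house)       # set/copy: write it on line 1
print(notes[1])                            # Porch Light

next_night = {"Kitchen": False, "Porch Light": True, "Hallway": False}
print(leave_for_the_night(next_night, notes))   # True
```

Notice that `leave_for_the_night` never mentions the porch. It only knows to look at line 1, which is exactly the difference between the two sentences in the story.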

Up Next

In future blog posts I intend to follow this analogy into programming languages and human-computer interaction, with explanations of things such as how keyboard and mouse motions show up on your screen, and what a browser does between requesting a website and laying out the content. Most importantly, I want to convey an understanding of how a computer never really leaves the realm of common sense.